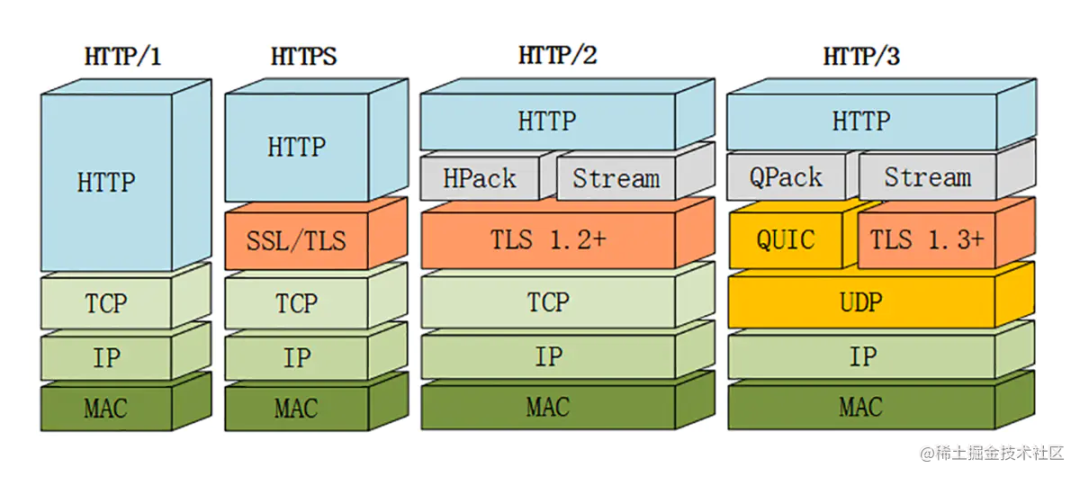

HTTP History - From HTTP/1 to HTTP/3

Birth

To talk about HTTP, we first need to understand the World Wide Web (WWW).

The WWW combines the client/server model with two technologies: hyperlinks that let users jump from one site to another, and the transmission of documents written in HyperText Markup Language (HTML).

In 1989, Tim Berners-Lee proposed the Web at CERN, and by the end of 1990 he had built the world's first Web server and the first Web client. At that stage, about all it could be used for was an electronic phone directory.

HTTP (HyperText Transfer Protocol) is the foundational protocol of the World Wide Web: it defines the rules by which browsers and servers communicate.

Most networks in common use, including the Internet, run on the TCP/IP protocol suite, and HTTP is one of the protocols within that suite.

As HTTP kept gaining features, it evolved from HTTP/0.9 all the way to HTTP/3.

HTTP/0.9

HTTP was not a formal standard when it was first created. It was first formally specified as HTTP/1.0, published in 1996, so the earlier protocol came to be called HTTP/0.9.

A request consists of a single line: the GET method (the only method available) followed by the path of the resource.

GET /index.html

The response contains only the file content itself.

<html>
<body>HELLO WORLD!</body>
</html>

HTTP/0.9 has no headers and no content types, so only HTML files can be transferred. It has no status codes either. When an error occurs, the server simply sends back an HTML page describing the error.
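The one-line exchange can be made concrete with a minimal sketch of an HTTP/0.9 server and client in Python. Both ends run over a local `socket.socketpair()` rather than a real network. The function names `serve_http09` and `fetch_http09` are hypothetical. There is no status line and there are no headers: the only signal that the response has ended is the server closing the connection.

```python
import socket
import threading

def serve_http09(conn: socket.socket, pages: dict) -> None:
    """Handle one HTTP/0.9 request: read the single request line, send raw HTML, close."""
    with conn:
        request_line = b""
        while not request_line.endswith(b"\r\n"):
            byte = conn.recv(1)
            if not byte:
                return  # client went away before finishing the request line
            request_line += byte
        # HTTP/0.9 only has GET, so the method is not inspected further.
        _method, path = request_line.decode("ascii").split()
        # No status codes exist, so a missing page is just another HTML document.
        body = pages.get(path, b"<html><body>Not found</body></html>")
        conn.sendall(body)
    # Leaving the `with` block closes the socket, which marks the end of the response.

def fetch_http09(conn: socket.socket, path: str) -> bytes:
    """Send 'GET <path>' and read everything until the server closes the connection."""
    with conn:
        conn.sendall(f"GET {path}\r\n".encode("ascii"))
        body = b""
        while chunk := conn.recv(4096):
            body += chunk
        return body

pages = {"/index.html": b"<html><body>HELLO WORLD!</body></html>"}
client_sock, server_sock = socket.socketpair()
server = threading.Thread(target=serve_http09, args=(server_sock, pages))
server.start()
body = fetch_http09(client_sock, "/index.html")
server.join()
print(body.decode())
```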

HTTP/1.0

Internet technology developed rapidly and HTTP was used more and more widely. The original protocol's limitations could no longer support the growing variety of things people did online. HTTP/1.0 was therefore published in May 1996, greatly expanding the protocol's content and functions. Compared with HTTP/0.9, the new version adds the following features:

- Add a version number to the request line of every request

- Add a status line as the first line of every response

- Add headers to both requests and responses

- Add Content-Type to the headers so that files other than HTML can be transferred

- Add Content-Encoding to the headers so that compressed content can be transferred

- Introduce the POST and HEAD methods

- Support persistent (keep-alive) connections, although short-lived connections remain the default

GET /index.html HTTP/1.0
User-Agent: NCSA_Mosaic/2.0 (Windows 3.1)

HTTP/1.0 200 OK
Date: Tue, 15 Nov 1994 08:12:31 GMT
Server: CERN/3.0 libwww/2.17
Content-Type: text/html; charset=utf-8

<HTML>
A page with an image
<IMG src="/image.gif">
</HTML>
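HTTP/1.0 adds a status line and headers, so a client now has to split the response before it can use the body. The sketch below does that for the response above. It assumes CRLF line endings and no folded header lines. `parse_http10_response` is a hypothetical helper; production code would use a library such as Python's `http.client` instead.

```python
def parse_http10_response(raw: bytes):
    """Split a raw HTTP/1.0 response into (status code, reason, headers, body)."""
    head, _, body = raw.partition(b"\r\n\r\n")  # a blank line ends the header section
    status_line, *header_lines = head.decode("iso-8859-1").split("\r\n")
    version, code, reason = status_line.split(" ", 2)  # e.g. "HTTP/1.0 200 OK"
    if not version.startswith("HTTP/"):
        raise ValueError(f"unexpected status line: {status_line!r}")
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")  # split on the first colon only
        headers[name.strip().lower()] = value.strip()  # header names are case-insensitive
    return int(code), reason, headers, body

raw = (
    b"HTTP/1.0 200 OK\r\n"
    b"Date: Tue, 15 Nov 1994 08:12:31 GMT\r\n"
    b"Server: CERN/3.0 libwww/2.17\r\n"
    b"Content-Type: text/html; charset=utf-8\r\n"
    b"\r\n"
    b'<HTML>\r\nA page with an image\r\n<IMG src="/image.gif">\r\n</HTML>'
)
status, reason, headers, body = parse_http10_response(raw)
print(status, reason, headers["content-type"])
```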

Content-Type

Plain text pages could not meet users' needs, so HTTP/1.0 added support for many more file types. Common Content-Type values include:

| Category | Common Content-Type values |
| --- | --- |
| Text | text/plain, text/html, text/css |
| Image | image/jpeg, image/png, image/svg+xml |
| Application | application/javascript, application/zip, application/pdf |

The content type can also be declared inside an HTML document:

<meta http-equiv="Content-Type" content="text/html; charset=UTF-8" />

Content-Encoding

Data in any format can now be sent, so it can also be compressed before sending. HTTP/1.0 introduced Content-Encoding to indicate how the data is compressed.

- Content-Encoding: gzip. [The gzip format: Lempel-Ziv coding (LZ77) with a 32-bit CRC checksum.]

- Content-Encoding: compress. [The Lempel-Ziv-Welch (LZW) compression algorithm.]

- Content-Encoding: deflate. [The zlib format, which wraps the deflate compression algorithm.]

In its request, the client lists the compression methods it can accept, here gzip and deflate:

Accept-Encoding: gzip, deflate

The server states the compression method it actually used in the Content-Encoding response header:

Content-Encoding: gzip
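The negotiation above can be sketched as follows. The server picks the first coding in the client's Accept-Encoding list that it supports, compresses the body with it, and labels the result with Content-Encoding. This is a simplified illustration: it ignores the q-values (such as `gzip;q=0.5`) that real Accept-Encoding headers may carry, and `encode_body` and `choose_encoding` are hypothetical names. Python's `gzip` and `zlib` modules produce the gzip and zlib (HTTP "deflate") formats respectively.

```python
import gzip
import zlib
from typing import Optional

# Codings this hypothetical server supports, mapped to compressors.
COMPRESSORS = {
    "gzip": gzip.compress,     # gzip format: DEFLATE data wrapped with a header and CRC-32
    "deflate": zlib.compress,  # zlib format, which is what HTTP's "deflate" denotes
}

def choose_encoding(accept_encoding: str) -> Optional[str]:
    """Return the first coding listed by the client that the server supports."""
    for item in accept_encoding.split(","):
        coding = item.split(";")[0].strip().lower()  # drop any ";q=..." parameter
        if coding in COMPRESSORS:
            return coding
    return None

def encode_body(body: bytes, accept_encoding: str):
    """Compress the body if possible and return (response headers, payload)."""
    coding = choose_encoding(accept_encoding)
    if coding is None:
        return {}, body  # nothing in common: send it uncompressed
    return {"Content-Encoding": coding}, COMPRESSORS[coding](body)

page = b"<html>" + b"hello " * 200 + b"</html>"
headers, payload = encode_body(page, "gzip, deflate")
print(headers, len(page), "->", len(payload))
```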

Disadvantages of HTTP/1.0

- Head-of-line blocking: each TCP connection can carry only one request and is closed once the data has been sent, so requesting another resource requires a new connection.

- Connections are short-lived by default, so every HTTP request pays for a TCP three-way handshake to open the connection and a four-way handshake to close it.

- Only 16 status codes are defined.

HTTP/1.1

HTTP/1.1 was released only months after HTTP/1.0 was published. It remains a mainstream version to this day, and no new version of HTTP appeared for more than a decade after it.

Compared with the previous version, the main updates are:

- Connections can be reused (keep-alive), which saves the time of opening a new connection for each resource embedded in a single document.

- Pipelining was added. A second request can be sent before the response to the first has been fully transmitted, which reduces communication latency.

- Chunked transfer encoding allows a response to be sent in chunks.

- Additional cache-control mechanisms were introduced.

- Content negotiation (language, encoding, and type) was introduced, so clients and servers can agree on what content to exchange.

- The Host header makes it possible to serve different domains from the same IP address, so several sites can share one server.

keep-alive

Establishing a connection involves DNS resolution and the TCP three-way handshake. Repeatedly opening and closing connections to fetch resources from the same server therefore costs a great deal of time and resources. To make connections more efficient, HTTP/1.1 added persistent (long-lived) connections to the standard and made them the default. The server keeps the client's connection open as the protocol requires, so multiple resources on one server can be fetched with multiple requests over a single connection.

A client can include the following request header to tell the server not to close the connection after a request completes:

Connection: keep-alive

The server replies with the same header to confirm that the connection remains open. In HTTP/1.0, however, this was only an informal convention among implementers and was not part of the standard. Reusing connections in this way can nearly double communication efficiency.

This also lays the groundwork for pipelining.

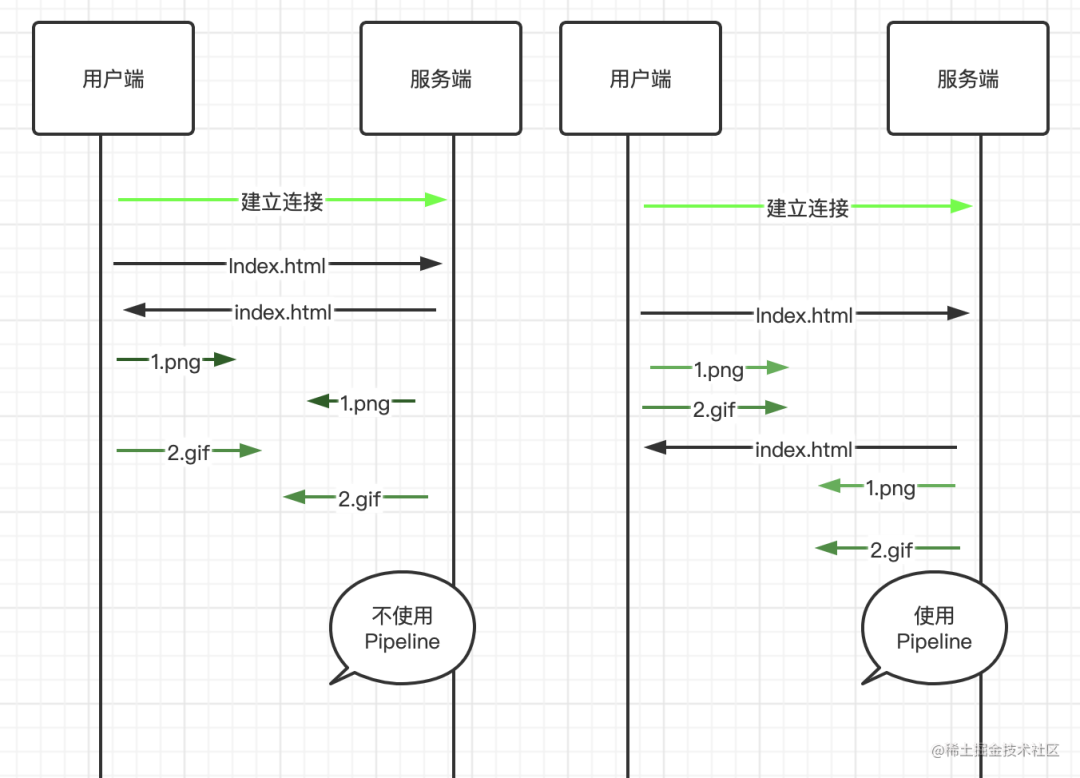

Pipelining

HTTP/1.1 tries to relieve this performance bottleneck with HTTP pipelining. As the figure shows, without pipelining the next request can be sent only after the previous response has come back. With pipelining, multiple HTTP requests can be sent back-to-back on one connection without waiting for their responses.

Unfortunately, a large or slow response still blocks every response queued behind it. HTTP/1.1 messages carry no identifier that ties a response to its request, so the server must return responses in the same order in which it received the requests. This is head-of-line blocking, and it is why mainstream browsers disable pipelining by default. HTTP/2 solves this problem.
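The ordering constraint can be made concrete with a small timing model. Assume, hypothetically, that the server finishes generating three pipelined responses at the times in `ready_at`, and ignore transmission time. Because responses must leave in request order, each one is delivered no earlier than the one before it.

```python
def pipelined_delivery_times(ready_at):
    """Delivery time of each response when responses must follow request order."""
    delivered = []
    previous = 0.0
    for ready in ready_at:
        previous = max(previous, ready)  # cannot overtake the response ahead of it
        delivered.append(previous)
    return delivered

# The first request is slow (5 s); the next two would be ready after 1 s.
times = pipelined_delivery_times([5.0, 1.0, 1.0])
print(times)
```

The second and third responses are ready after 1 s, yet they cannot be delivered until the slow first response goes out at 5 s.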

Host header field

HTTP/1.0 assumed that each server was bound to a unique IP address, so the request line did not carry a hostname. HTTP/1.1 introduced Host to handle multiple virtual hosts sharing one IP address.

The Host request header specifies the domain name of the server. With it, different websites can be hosted on the same server, which also laid the foundation for later developments in virtualization.

Host: www.alibaba-inc.com
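Name-based virtual hosting comes down to reading the Host header before choosing which site to serve. The sketch below assumes a hypothetical table of two sites that share one IP address; `blog.example.com` is made up for illustration. A request with no Host header gets 400, as HTTP/1.1 requires. Answering 404 for an unknown host is just a choice made here.

```python
from typing import Tuple

# Hypothetical sites hosted on the same IP address.
SITES = {
    "www.alibaba-inc.com": "<html>Main site</html>",
    "blog.example.com": "<html>Blog</html>",
}

def route_by_host(raw_request: str) -> Tuple[int, str]:
    """Pick a site from the Host header of a raw HTTP/1.1 request."""
    header_lines = raw_request.split("\r\n")[1:]  # skip the request line
    headers = {}
    for line in header_lines:
        if not line:
            break  # blank line: end of headers
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    if "host" not in headers:
        return 400, "Bad Request: HTTP/1.1 requires a Host header"
    hostname = headers["host"].split(":")[0].lower()  # drop an optional ":port"
    if hostname not in SITES:
        return 404, "Not Found"
    return 200, SITES[hostname]

print(route_by_host("GET / HTTP/1.1\r\nHost: blog.example.com\r\n\r\n"))
```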

Cache mechanism

Caching not only speeds up access for users but also saves mobile users a great deal of data traffic. HTTP/1.1 therefore added many cache-related header fields and built a more flexible and richer caching mechanism around them.

The caching mechanism needs to solve the following problems:

- Determine which resources can be cached and what their caching policy is.

- Determine locally whether a cached resource has expired.

- Ask the server whether an expired cached resource has changed on the server.

Chunked mechanism

Once a connection is established, the client can send multiple requests over it. It normally determines how much data the server is returning from the Content-Length response header. As networks developed, however, more and more resources became dynamic. The server often cannot know a resource's size before sending it, so it cannot announce the size through Content-Length. Instead, the server can generate the resource and send it to the client at the same time. This mechanism, called chunked transfer encoding, lets the server split the data it sends into multiple chunks. It uses the "Transfer-Encoding: chunked" header field in place of the traditional Content-Length.

Transfer-Encoding: chunked
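In chunked encoding, each chunk is sent as four parts: its size in hexadecimal, a CRLF, the data, and another CRLF. A zero-size chunk marks the end of the body. The sketch below encodes and decodes a body that way. It skips chunk extensions and does not return trailer headers, and the function names are hypothetical.

```python
def encode_chunked(parts):
    """Encode byte strings as an HTTP/1.1 chunked body."""
    out = bytearray()
    for part in parts:
        if part:  # an empty chunk would signal the end of the body too early
            out += f"{len(part):X}\r\n".encode("ascii") + part + b"\r\n"
    out += b"0\r\n\r\n"  # last chunk (size 0) followed by an empty trailer section
    return bytes(out)

def decode_chunked(data: bytes) -> bytes:
    """Reassemble the body from a chunked encoding (trailers are not returned)."""
    body = bytearray()
    pos = 0
    while True:
        line_end = data.index(b"\r\n", pos)
        size = int(data[pos:line_end].split(b";")[0], 16)  # ignore chunk extensions
        if size == 0:
            return bytes(body)
        start = line_end + 2
        body += data[start:start + size]
        pos = start + size + 2  # skip the data and its trailing CRLF

# The server can emit each part as soon as it is generated, without knowing the total size.
wire = encode_chunked([b"Hello, ", b"chunked ", b"world"])
print(wire)
print(decode_chunked(wire))
```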

HTTP caching mechanism

Compared with HTTP 1.0, HTTP 1.1 adds several new caching mechanisms:

Strong cache

The strong cache is checked first and is the fastest. The browser's developer tools may show "(from memory cache)" or "(from disk cache)" next to the status code. Either label means the response was read from the strong cache, in memory or on disk respectively. The memory cache lasts only as long as the process: once the tab is closed, that data is gone. The negotiated cache is consulted only when the strong cache misses.

Pragma

The Pragma header field is a product of HTTP/1.0 and is now defined only for backward compatibility with it. It is used in request headers to ask caches along the way not to return a cached resource without first revalidating it with the origin server. This is the same meaning as Cache-Control: no-cache.

Pragma: no-cache

Expires

Expires appears only in response headers and gives the time after which the resource is considered stale. When a request is made, the browser compares the Expires value with its local time. If the local time is earlier than the Expires time, it reads from the cache.

The value of Expires is an HTTP date in GMT:

Expires: Wed, 21 Oct 2015 07:28:00 GMT

When both Cache-Control: max-age=xx and Expires are present in the headers, max-age takes precedence.

Cache-Control

Expires is an absolute time compared against the client's local clock, so it breaks when that clock is wrong. Cache-Control was introduced to address this limitation. Commonly used directives include:

- no-store: The cache must not store anything about the client request or the server response.

- no-cache: The cache must send the request to the origin server for validation before releasing a cached copy.

- max-age: A relative expiration time in seconds. It tells the client how long the resource stays fresh and can be used without contacting the server.
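The freshness rules above can be sketched as a single check that a cache might run before serving a stored response. no-store and no-cache rule out serving directly. If max-age is present, it is compared with the response's age. Only otherwise is Expires compared with the current time. This is a simplification: it takes the age to be the time since the response was received, and ignores the Age header, clock-skew corrections, and heuristic freshness that real caches apply. The helper names are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from email.utils import parsedate_to_datetime

def parse_cache_control(value: str) -> dict:
    """Turn 'max-age=60, no-cache' into {'max-age': '60', 'no-cache': None}."""
    directives = {}
    for part in value.split(","):
        name, sep, arg = part.strip().partition("=")
        if name:
            directives[name.lower()] = arg.strip('"') if sep else None
    return directives

def can_serve_from_strong_cache(headers: dict, received_at: datetime, now: datetime) -> bool:
    """True if a stored response may be used without contacting the server."""
    cc = parse_cache_control(headers.get("Cache-Control", ""))
    if "no-store" in cc or "no-cache" in cc:
        return False  # never stored, or must be revalidated first
    max_age = cc.get("max-age")
    if max_age is not None and max_age.isdigit():
        # max-age takes precedence over Expires when both are present.
        return now - received_at < timedelta(seconds=int(max_age))
    if "Expires" in headers:
        return now < parsedate_to_datetime(headers["Expires"])
    return False  # no explicit freshness information: treated as stale here

received = datetime(2015, 10, 21, 7, 0, tzinfo=timezone.utc)
later = received + timedelta(minutes=2)
both = {"Cache-Control": "max-age=60", "Expires": "Wed, 21 Oct 2015 07:28:00 GMT"}
print(can_serve_from_strong_cache(both, received, later))  # max-age=60 has run out
```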

Negotiated cache

When the strong cache misses, the browser falls back to the negotiated cache, which is controlled by the following HTTP fields.

Last-Modified

When the server sends a resource to the client, it adds the resource's last modification time, as a GMT date, in a Last-Modified header:

Last-Modified: Mon, 22 Jul 2019 01:47:00 GMT

The client records this value. When it requests the resource again, it sends the time back to the server in an If-Modified-Since header. If the resource has not been modified since then, the server returns 304 Not Modified. Otherwise it returns the full resource with 200.
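On the server side, the Last-Modified negotiation above comes down to comparing the resource's modification time with the client's If-Modified-Since value. The following is a minimal sketch. It assumes the modification time is already known as a UTC `datetime` with whole-second precision, since HTTP dates have one-second resolution. `handle_conditional_get` is a hypothetical name.

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime

def handle_conditional_get(request_headers: dict, last_modified: datetime, body: bytes):
    """Return (status, response headers, body) for a GET that may carry If-Modified-Since."""
    headers = {"Last-Modified": format_datetime(last_modified, usegmt=True)}
    since_header = request_headers.get("If-Modified-Since")
    if since_header is not None:
        try:
            since = parsedate_to_datetime(since_header)
        except (TypeError, ValueError):
            since = None  # an unparseable date is ignored, so the full response is sent
        if since is not None and since.tzinfo is None:
            since = since.replace(tzinfo=timezone.utc)  # "-0000" dates parse as naive UTC
        if since is not None and last_modified <= since:
            return 304, headers, b""  # Not Modified: the client's copy is still valid
    return 200, headers, body

modified = datetime(2019, 7, 22, 1, 47, tzinfo=timezone.utc)
first = handle_conditional_get({}, modified, b"<html>...</html>")
revisit = handle_conditional_get(
    {"If-Modified-Since": first[1]["Last-Modified"]}, modified, b"<html>...</html>"
)
print(first[0], first[1], revisit[0])
```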

Priority

Strong cache, then negotiated cache: Cache-Control -> Expires -> ETag -> Last-Modified.

Five new request methods have been added

OPTIONS: Asks the server which communication options it supports. Browsers use it as the preflight request that checks whether a cross-origin request is allowed.

PUT: Replaces the contents of the specified document with data sent from the client.

DELETE: Requests that the server delete the specified page.

TRACE: Echoes back the request received by the server, mainly for testing or diagnostics.

CONNECT: Reserved in HTTP/1.1 for proxy servers that can turn the connection into a tunnel.

A series of new status codes were added

You can refer to the complete list of status codes

HTTP/1.1 shortcomings

- High latency slows page loading: head-of-line blocking prevents the available bandwidth from being fully used.

- Because HTTP is stateless, every request carries large, repetitive headers.

- Plaintext transmission is insecure.

- Server push is not supported.

HTTP/2.0

As the Web developed, pages grew more complex, and some became applications in their own right. They display more visual media, and the number and size of scripts that add interactivity keep growing. More data is transferred through more HTTP requests, adding complexity and overhead to HTTP/1.1 connections. To address this, Google implemented an experimental protocol, SPDY, in the early 2010s. Given SPDY's success, HTTP/2 used SPDY as the blueprint for its design. HTTP/2 was officially standardized in May 2015.

Differences between HTTP/2 and HTTP/1.1:

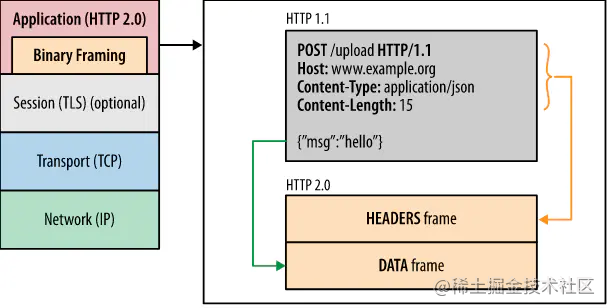

- A binary framing layer.

- A multiplexed protocol: parallel requests can be made over the same connection, removing the constraints of HTTP/1.x.

- Header compression with HPACK: requests in a group are often similar, so HPACK removes duplicated header data and the overhead of transferring it.

- Server push: the server can populate the client's cache with data before the client requests it.

Header compression

HTTP/1.x headers carry a lot of information, and all of it is sent again with every request. HTTP/2 compresses headers with HPACK, a format tailored specifically for it. HPACK uses an index table of common HTTP headers, and both sides maintain a table of header fields. This avoids retransmitting repeated headers and shrinks the data that must be sent.

The protocol format may look completely different from HTTP/1.x, but HTTP/2 does not change HTTP/1.x semantics. It simply re-encapsulates the original headers and body in frames.
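The indexing idea behind HPACK can be sketched with a toy model. Both sides start from the same static table, and every header sent literally is added to a dynamic table, so repeating it later costs only an index. The first eight static entries below are copied from HPACK's static table (RFC 7541, Appendix A). Everything else is a deliberate simplification: there is no Huffman coding, no table size limit or eviction, and tuples stand in for the real binary wire format. `ToyHeaderTable`, `encode`, and `decode` are hypothetical names.

```python
# First entries of HPACK's static table (RFC 7541, Appendix A); indices start at 1.
STATIC_TABLE = [
    (":authority", ""),
    (":method", "GET"),
    (":method", "POST"),
    (":path", "/"),
    (":path", "/index.html"),
    (":scheme", "http"),
    (":scheme", "https"),
    (":status", "200"),
]

class ToyHeaderTable:
    """Static table plus a dynamic table that both endpoints keep in sync."""

    def __init__(self):
        self.dynamic = []  # newest entry first, as in HPACK

    def entries(self):
        return STATIC_TABLE + self.dynamic

    def index_of(self, field):
        entries = self.entries()
        return entries.index(field) + 1 if field in entries else None

    def add(self, field):
        self.dynamic.insert(0, field)

def encode(headers, table):
    """Replace known header fields with indices; send the rest literally and remember them."""
    block = []
    for field in headers:
        index = table.index_of(field)
        if index is not None:
            block.append(("indexed", index))
        else:
            block.append(("literal", field))
            table.add(field)
    return block

def decode(block, table):
    """Mirror of encode: look up indices, and record literals in the same order."""
    headers = []
    for kind, value in block:
        if kind == "indexed":
            headers.append(table.entries()[value - 1])
        else:
            headers.append(value)
            table.add(value)
    return headers

sender, receiver = ToyHeaderTable(), ToyHeaderTable()
request = [(":method", "GET"), (":path", "/index.html"), ("user-agent", "demo-client")]
first = encode(request, sender)
second = encode(request, sender)  # same headers on the next request
print(first)
print(second)
```

On the second request every field, including the custom user-agent, shrinks to a table index.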

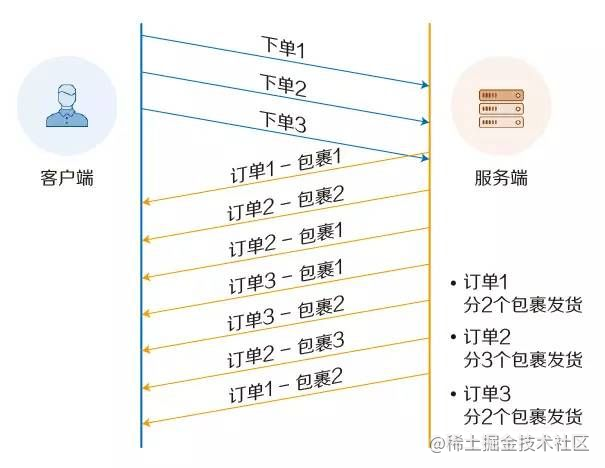

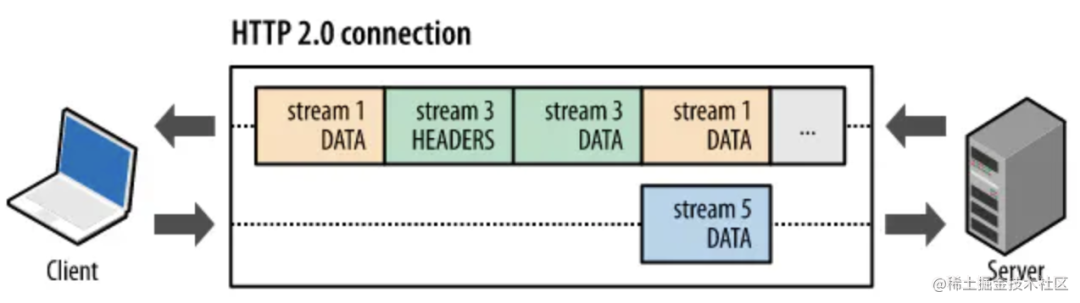

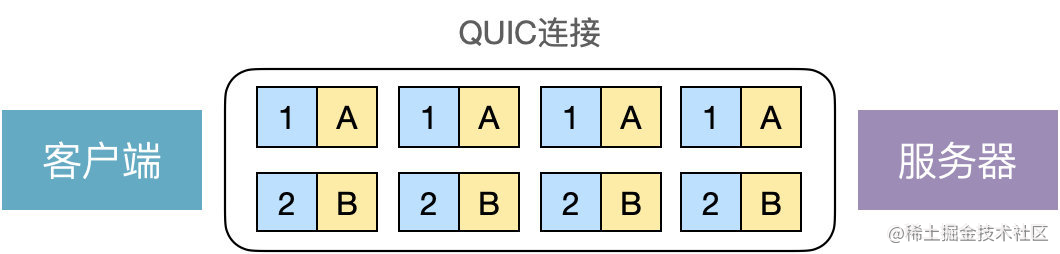

Multiplexing

To solve head-of-line blocking in HTTP/1.x, HTTP/2 introduces multiplexing. Each request/response exchange is treated as a stream. A stream is split into frames of different types according to their payload, such as HEADERS frames and DATA frames. Frames belonging to different streams can be interleaved on the same connection, so multiple requests can be in flight at the same time. As a result, head-of-line blocking at the HTTP layer is no longer a problem.
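The stream-and-frame model can be illustrated without any real HTTP/2 machinery. Split each response into frames tagged with a stream identifier, interleave the frames on one "connection", and regroup them by identifier on the other side. In this sketch the streams use odd IDs 1 and 3, as client-initiated HTTP/2 streams do. The frame size and helper names are hypothetical.

```python
from collections import defaultdict
from itertools import zip_longest

def split_into_frames(stream_id: int, payload: bytes, max_size: int):
    """Cut one stream's payload into (stream_id, chunk) frames."""
    return [(stream_id, payload[i:i + max_size]) for i in range(0, len(payload), max_size)]

def interleave(*streams):
    """Round-robin the frames of several streams onto one connection."""
    wire = []
    for round_ in zip_longest(*streams):
        wire.extend(frame for frame in round_ if frame is not None)
    return wire

def reassemble(wire):
    """Group frames by stream ID; frames of the same stream arrive in order."""
    streams = defaultdict(bytes)
    for stream_id, chunk in wire:
        streams[stream_id] += chunk
    return dict(streams)

wire = interleave(
    split_into_frames(1, b"<html>index</html>", 6),
    split_into_frames(3, b"body{color:red}", 6),
)
print([stream_id for stream_id, _ in wire])
print(reassemble(wire))
```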

Although HTTP/2 solves the head-of-line blocking at the HTTP layer through multiplexing, there is still head-of-line blocking at the TCP layer.

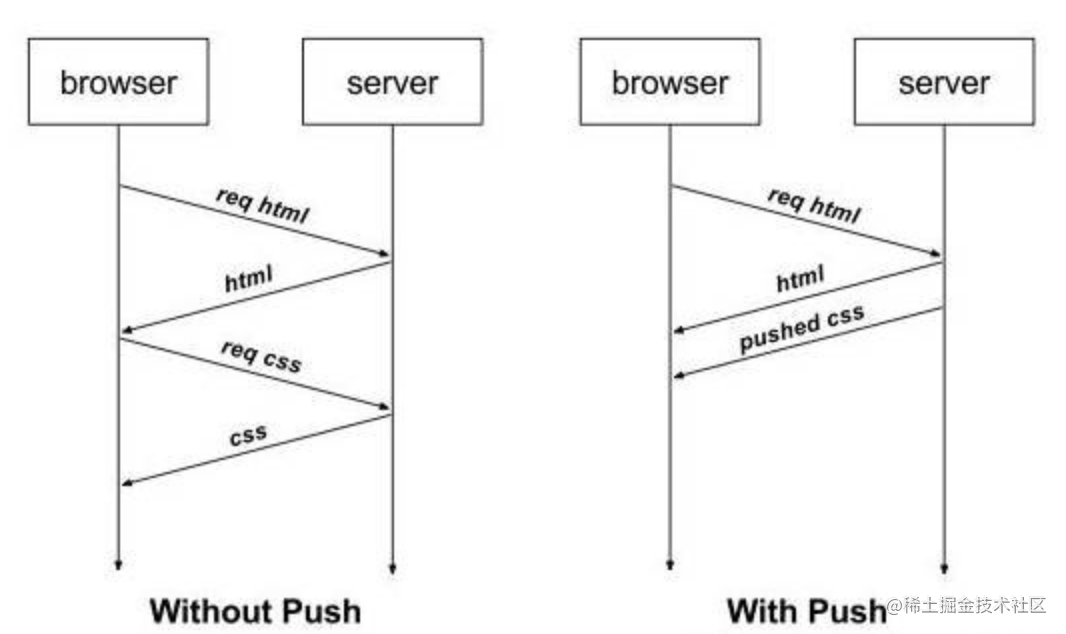

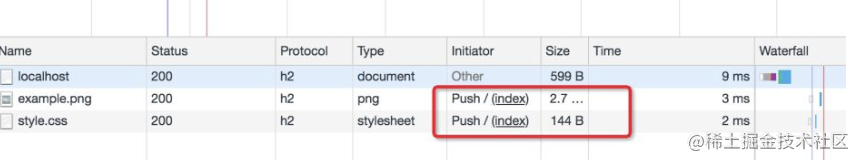

Server push

The server can proactively send resources to the client. When the browser requests an HTML page, the server can also push static resources such as JS and CSS files. The client can then load them locally instead of requesting them over the network, which saves the browser those extra requests.

Using server push

Many servers can trigger push from a Link header that lists resources to preload:

Link: </css/styles.css>; rel=preload; as=style,
</img/example.png>; rel=preload; as=image

In the browser's developer tools, an Initiator value of Push shows that the server actively pushed the resource.

Pushing files proactively inevitably risks sending files that are redundant or already in the browser's cache.

To avoid this, the client can use a compact Cache Digest (a proposed extension) to tell the server what is already in its cache, so the server knows what the client actually needs.

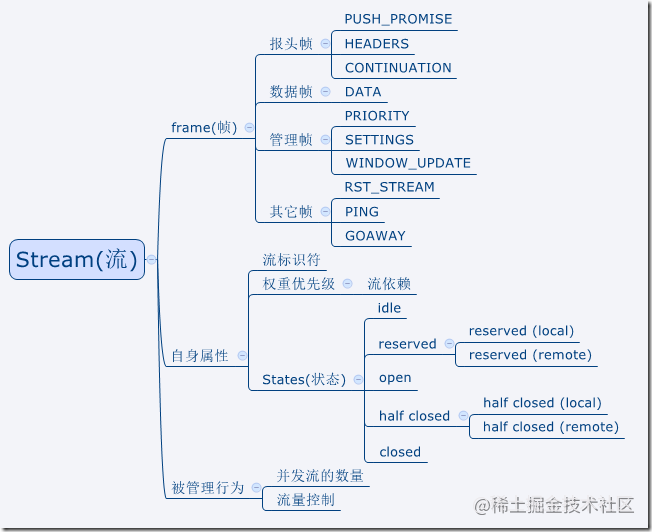

Stream

A stream is an independent, bidirectional sequence of frames exchanged between the client and server within an HTTP/2 connection. HTTP/2 virtualizes multiple streams on a single TCP connection, and these streams multiplex the connection. This makes the best use of the link within limited resources.

All communication takes place over a single TCP connection, which can carry any number of bidirectional streams.

Each stream has a unique identifier and a priority.

Each message is a complete logical request or response and consists of one or more frames.

Frames from different streams can be interleaved and then reassembled using the stream identifier in each frame header.

Streams were introduced to enable multiplexing, so data for multiple requests and responses can be transmitted at the same time over a single connection.

Binary framing layer

In HTTP/1.x, browsers open multiple TCP connections to improve performance. This does not remove head-of-line blocking, and the connections compete with one another, so important requests cannot reliably get a connection. HTTP/2's binary framing layer splits request and response data into smaller frames encoded in binary, whereas HTTP/1.x is a text format. Frames from different streams can be sent interleaved and reassembled at the other end according to the stream identifier in each frame header.

Binary framing is also friendlier to machines. Instead of parsing a plaintext message, the receiver decodes binary fields directly, which makes processing more efficient.
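Every HTTP/2 frame starts with the same fixed 9-byte header defined in RFC 7540. It holds a 24-bit payload length, an 8-bit type, 8 bits of flags, and a reserved bit followed by a 31-bit stream identifier. Reading fixed-size fields like these is the direct binary parsing described above. The sketch packs and unpacks only that header and leaves out the payload and most frame types. The function names are hypothetical.

```python
import struct

FRAME_TYPES = {0x0: "DATA", 0x1: "HEADERS"}  # two of the frame types defined by HTTP/2
END_STREAM = 0x1  # flag: the sender will send nothing more on this stream

def pack_frame_header(length: int, frame_type: int, flags: int, stream_id: int) -> bytes:
    if not 0 <= length < 1 << 24:
        raise ValueError("length must fit in 24 bits")
    # 3-byte length, then type, flags and the stream ID with the reserved bit cleared.
    return length.to_bytes(3, "big") + struct.pack(">BBI", frame_type, flags, stream_id & 0x7FFFFFFF)

def unpack_frame_header(header: bytes):
    if len(header) != 9:
        raise ValueError("an HTTP/2 frame header is exactly 9 bytes")
    length = int.from_bytes(header[:3], "big")
    frame_type, flags, stream_word = struct.unpack(">BBI", header[3:])
    return length, frame_type, flags, stream_word & 0x7FFFFFFF  # ignore the reserved bit

header = pack_frame_header(16, 0x0, END_STREAM, 3)
length, frame_type, flags, stream_id = unpack_frame_header(header)
print(header.hex(), FRAME_TYPES[frame_type], length, stream_id)
```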

Think of each frame as a student and each stream as a group, with the stream identifier as the frame's group label. The students in one class (a connection) are divided into several groups, each assigned a different task, and the groups work on their tasks concurrently. If one group's task takes a long time, the other groups carry on normally.

Finally, let's look at the improvement HTTP/2 brings under ideal conditions.

Shortcomings

- Connection setup delay from the TCP and TCP+TLS handshakes.

- HTTP/2 does not fully solve head-of-line blocking at the TCP layer. If any packet is lost, or either side's network is interrupted, the whole TCP connection stalls until the lost packet is retransmitted, blocking every request on that connection. On poor or unstable networks, using multiple connections can actually perform better.

HTTP/3.0 (HTTP-over-QUIC)

Solving head-of-line blocking within TCP is very difficult, yet the explosive growth of the Internet demands more stability and security. The Internet Engineering Task Force (IETF) held the first meeting of its QUIC (Quick UDP Internet Connections) working group to develop a low-latency Internet transport protocol based on UDP. HTTP-over-QUIC was renamed HTTP/3 in November 2018.

0-RTT handshake

In TCP, the handshake takes three steps:

1. The client sends a SYN packet (SYN, seq=x) to the server.
2. The server replies with a packet (SYN, seq=y; ACK, ack=x+1).
3. The client sends an acknowledgment to the server (ACK, seq=x+1, ack=y+1).

Both sides then enter the ESTABLISHED state, completing the three-way handshake. This costs a full round trip before any application data can be sent.
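The sequence and acknowledgment numbers in the three-way handshake follow a simple rule. Each SYN consumes one sequence number, so each side acknowledges the other's initial sequence number plus one. The sketch below just computes the three segments for given initial sequence numbers x and y; the values used are arbitrary. TCP sequence numbers are 32-bit, so the arithmetic wraps modulo 2^32.

```python
SEQ_MOD = 2 ** 32  # TCP sequence numbers are 32-bit and wrap around

def three_way_handshake(x: int, y: int):
    """Return the three handshake segments for client ISN x and server ISN y."""
    syn = {"from": "client", "flags": ("SYN",), "seq": x}
    syn_ack = {"from": "server", "flags": ("SYN", "ACK"), "seq": y, "ack": (x + 1) % SEQ_MOD}
    ack = {"from": "client", "flags": ("ACK",), "seq": (x + 1) % SEQ_MOD, "ack": (y + 1) % SEQ_MOD}
    return [syn, syn_ack, ack]

for segment in three_way_handshake(x=1000, y=5000):
    print(segment)
```

Only after the third segment can the client start sending request data, which is the round trip that QUIC's 0-RTT mode aims to avoid.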

1-RTT

- The client generates a random number a, selects a public base X, computes A = a*X, and sends X and A to the server.

- The server generates a random number b, computes B = b*X, and sends B to the client.

- The client uses ECDH to compute the communication key key = a*B = a(b*X).

- The server uses ECDH to compute the communication key key = b*A = b(a*X).

sequenceDiagram
Client->>Server: Client Hello
Server-->>Client: Server Hello

The key ingredient is therefore the ECDH algorithm. a and b are the private keys of the client and server and are never made public. Even an attacker who knows A and X cannot derive a from A = a*X, which keeps the private keys secure.
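The key-agreement steps above can be checked numerically. The sketch below substitutes classic finite-field Diffie-Hellman for ECDH. "a*X" becomes `pow(X, a, P)`, so modular exponentiation stands in for elliptic-curve scalar multiplication. The structure is the same: each side combines its own secret with the other's public value. The 32-bit prime `P` and base `X` are toy values chosen only to keep the numbers small, and they are not secure. Real QUIC and TLS 1.3 deployments commonly use standardized groups such as X25519.

```python
import secrets

# Toy public parameters (insecure, for illustration only).
P = 4294967291  # the largest prime below 2**32
X = 5           # public base shared by both sides

def make_keypair():
    private = secrets.randbelow(P - 2) + 1  # secret random number in [1, P-2]
    public = pow(X, private, P)             # analogue of private * X
    return private, public

a, A = make_keypair()  # client: sends A (and X) to the server
b, B = make_keypair()  # server: sends B to the client

client_key = pow(B, a, P)  # analogue of a*B = a(b*X)
server_key = pow(A, b, P)  # analogue of b*A = b(a*X)
print(client_key == server_key)
```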

0-RTT

0-RTT means the client caches the ServerConfig (B = b*X) and, the next time it connects, uses the cached data to compute the communication key:

sequenceDiagram
Client->>Server: Client Hello + application data
Server-->>Client: ACK

- Client: generates a random number c, selects the public base X, computes A = c*X, and sends A and X to the server in the Client Hello. Right after the Client Hello, the client uses the cached B to compute the communication key key = c*B = c*b*X and sends application data encrypted with it.

- Server: computes the communication key key = b*A = b(c*X) from the Client Hello message.

The client can therefore derive the key from the cached B and send application data immediately, without waiting for a handshake round trip.

Now consider another problem. Suppose an attacker records all the communication data and the public parameters A1, A2, and so on. If the server's random number b (its private key) is ever leaked, all previously recorded communication can be decrypted.

To solve this, a new communication key must be created for each session, which ensures forward secrecy.
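This sketch uses the same toy finite-field stand-in for ECDH, with insecure parameters for illustration only. It shows the 0-RTT flow and its weakness in a few lines. The client derives a key from the cached server value B before hearing anything back. Anyone who later learns the server's long-term b can then recompute that key from the recorded A. The names `server_b`, `cached_B`, and `stolen_key` are hypothetical.

```python
import secrets

P = 4294967291  # toy prime modulus (insecure)
X = 5           # public base

# Server's long-term secret b and the ServerConfig value B = b*X that clients cache.
server_b = secrets.randbelow(P - 2) + 1
cached_B = pow(X, server_b, P)

# Client: fresh random c, public value A = c*X, key = c*B, all before any reply arrives.
c = secrets.randbelow(P - 2) + 1
A = pow(X, c, P)
client_key = pow(cached_B, c, P)

# Server: on receiving the Client Hello carrying A, key = b*A.
server_key = pow(A, server_b, P)

# An attacker who recorded A and later steals server_b recovers the same key.
stolen_key = pow(A, server_b, P)
print(client_key == server_key == stolen_key)
```

Replacing the long-lived b with a fresh per-session value is what defeats the last step. This is the forward-secrecy requirement stated in the text.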

Ordered delivery

QUIC runs over UDP, which is an unreliable transport protocol. To guarantee ordering, every QUIC packet carries an offset field. The receiver uses it to put packets that arrive out of order back in sequence.

sequenceDiagram
Client->>Server: PKN=1, offset=0
Client->>Server: PKN=2, offset=1
Client->>Server: PKN=3, offset=2
Server-->>Client: SACK = 1,3
Client->>Server: Retransmit: PKN=4, offset=1
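The receiver's side of this exchange can be sketched as a reorder buffer keyed by offset. In the diagram, packet 2 (offset 1) is lost and its data is resent as packet 4 with the same offset 1. The packet number changes, but the offset still says where the bytes belong. This is a simplified model that assumes each packet carries one non-overlapping piece of a single stream. In real QUIC the offset lives in STREAM frames. `receive_in_order` is a hypothetical name.

```python
def receive_in_order(packets):
    """packets: (packet_number, offset, data) tuples in arrival order.

    Returns the bytes released to the application, always in offset order.
    """
    pending = {}      # out-of-order data waiting for a gap to be filled
    next_offset = 0   # first byte the application has not received yet
    delivered = bytearray()
    for _packet_number, offset, data in packets:
        if offset < next_offset or offset in pending:
            continue  # duplicate of data we already have
        pending[offset] = data
        while next_offset in pending:  # release every contiguous piece
            piece = pending.pop(next_offset)
            delivered += piece
            next_offset += len(piece)
    return bytes(delivered)

arrivals = [
    (1, 0, b"H"),  # PKN=1, offset=0
    # PKN=2 (offset=1) was lost in transit.
    (3, 2, b"Y"),  # PKN=3, offset=2 arrives, but offset 1 is missing: buffered
    (4, 1, b"E"),  # PKN=4 retransmits offset 1, filling the gap
]
print(receive_in_order(arrivals))
```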

Head-of-line blocking

HTTP/2 suffers head-of-line blocking at the TCP layer because all request streams share one sliding window. QUIC instead gives each request stream its own independent sliding window.

Packet loss on request stream A therefore does not affect data sent on request stream B. Within a single stream, however, head-of-line blocking still exists. QUIC removes it between streams at the transport layer, but data on one stream must still be delivered in order. This is what QUIC means when it claims multiplexing without head-of-line blocking.
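The difference can be illustrated with a toy model of what the receiver can hand to the application right after a loss, before the retransmission arrives. Four packets alternate between streams A and B, and the first one (stream A) is lost. With a single TCP byte stream, nothing behind the gap can be delivered. With QUIC's per-stream ordering, only stream A waits. The model ignores everything else about both protocols, and the function names are hypothetical.

```python
def deliverable_tcp(packets, lost):
    """One shared ordered stream: everything after the first gap must wait."""
    delivered = []
    for i, packet in enumerate(packets):
        if i in lost:
            break
        delivered.append(packet)
    return delivered

def deliverable_quic(packets, lost):
    """Per-stream ordering: a gap only blocks later data on the same stream."""
    blocked_streams = set()
    delivered = []
    for i, (stream, data) in enumerate(packets):
        if i in lost:
            blocked_streams.add(stream)
        elif stream not in blocked_streams:
            delivered.append((stream, data))
    return delivered

packets = [("A", "a1"), ("B", "b1"), ("A", "a2"), ("B", "b2")]
lost = {0}  # the first packet, carrying stream A's data, is lost
print(deliverable_tcp(packets, lost))
print(deliverable_quic(packets, lost))
```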

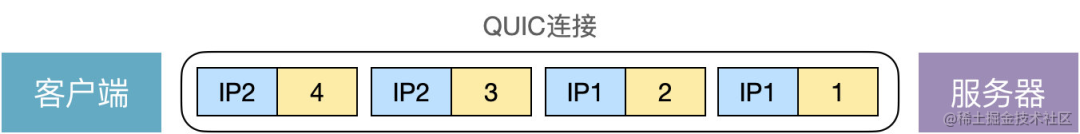

Connection migration

Connection migration means that when the client switches networks, its connection to the server is not broken and communication continues normally. This is impossible with TCP. A TCP connection is identified by a 4-tuple (source IP, source port, destination IP, destination port), and if any of them changes, the connection must be re-established. A QUIC connection is instead identified by a Connection ID (64 bits in Google's original QUIC; the IETF version allows up to 20 bytes). Switching networks does not change the Connection ID, so the connection logically stays up.

Suppose the client sends packets 1 and 2 from IP1, then switches networks so that its IP becomes IP2, and sends packets 3 and 4. All four packets carry the same Connection ID, so the server can tell that they come from the same client. The fundamental reason QUIC can migrate connections is that the underlying UDP protocol is connectionless, so nothing at the UDP level ties the session to a particular address.
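The key difference is how the server groups incoming packets. The sketch below replays the scenario above with hypothetical addresses and a made-up Connection ID. Grouping by 4-tuple splits the four packets into two unrelated connections. Grouping by Connection ID keeps them together.

```python
def group_packets(packets, key):
    """Group packet numbers by whatever the server uses to identify a connection."""
    connections = {}
    for packet in packets:
        connections.setdefault(key(packet), []).append(packet["number"])
    return connections

SERVER = ("203.0.113.10", 443)  # hypothetical server address
packets = [
    {"number": 1, "client": ("IP1", 50000), "conn_id": 0x1F2E3D4C5B6A7988},
    {"number": 2, "client": ("IP1", 50000), "conn_id": 0x1F2E3D4C5B6A7988},
    # Network switch: new client address, same Connection ID.
    {"number": 3, "client": ("IP2", 61000), "conn_id": 0x1F2E3D4C5B6A7988},
    {"number": 4, "client": ("IP2", 61000), "conn_id": 0x1F2E3D4C5B6A7988},
]

by_four_tuple = group_packets(packets, key=lambda p: (*p["client"], *SERVER))
by_connection_id = group_packets(packets, key=lambda p: p["conn_id"])
print(by_four_tuple)
print(by_connection_id)
```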

Finally, let's take a look at how HTTP has been upgraded over time.