How to Implement a Secure Service Mesh

In this article, I use Kuma as the example. Kuma is an open source solution built on Envoy that acts as a control plane for microservices and service meshes. It runs on both Kubernetes and virtual machines and can support multiple meshes in a single cluster.

There are other open source and managed service meshes to choose from, such as Istio, Linkerd, and Kong Mesh.

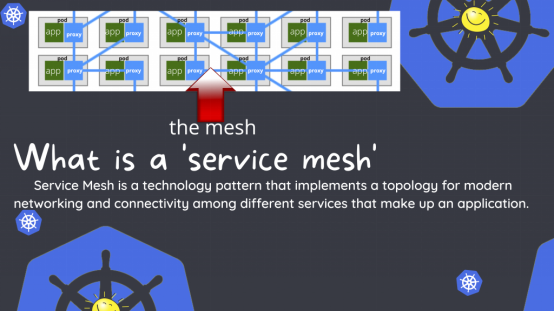

Why use a service mesh?

One of our main reasons for adopting a service mesh is security: it gives us mutual Transport Layer Security (mTLS) between internal pod services. A service mesh has other benefits as well. It can link workloads across multiple Kubernetes clusters, or connect standard applications running on bare metal to Kubernetes. It also provides tracing and logging of connections between pods, and it can export connection and endpoint health metrics to Prometheus.

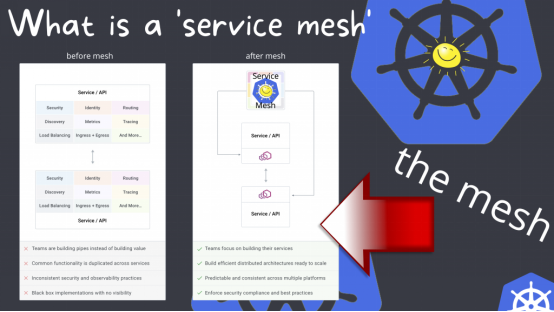

This diagram compares the workload before and after implementing a service mesh. On the left, before the mesh, the team spends its time building pipelines instead of products and services, common functionality is duplicated across services, security and observability practices are inconsistent, and black-box implementations offer no visibility.

On the right, after implementing a service mesh, the same team can focus on building products and services. It can build scalable, efficient distributed architectures with consistent observability across multiple platforms, which makes it easier to follow security and compliance best practices.

How the Kuma Service Mesh Architecture Works

The magic that moves your application pods' socket communication from plaintext to mTLS lives in three places: the Kuma control plane, the sidecars, and the Kuma Container Network Interface (CNI) plugin. When developers make changes or add new services to the application, Kuma transparently detects them and injects the required pieces to proxy traffic through its own network data plane automatically.
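To make "plaintext to mTLS" concrete, the Python sketch below shows the kind of TLS settings a sidecar applies on the application's behalf: both ends present a certificate, and both verify the peer against the mesh CA. The helper names are hypothetical, and the certificate, key, and CA file arguments stand in for material the control plane would issue. They are optional here only so the settings can be inspected without real files; with none loaded, every handshake would fail. Kuma's sidecar is Envoy, not Python code like this.

```python
import ssl
from typing import Optional


def mtls_server_context(
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
    ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """TLS settings for the receiving side of an mTLS connection (hypothetical helper)."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # The "mutual" part: reject any client that does not present a
    # certificate signed by a CA we trust (the mesh CA).
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        ctx.load_cert_chain(cert_file, key_file)  # this workload's own identity
    if ca_file:
        ctx.load_verify_locations(ca_file)  # trust anchor: the mesh CA only
    return ctx


def mtls_client_context(
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
    ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """TLS settings for the calling side (hypothetical helper)."""
    # PROTOCOL_TLS_CLIENT already requires and verifies the server's certificate.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if cert_file and key_file:
        ctx.load_cert_chain(cert_file, key_file)  # the client proves its identity too
    if ca_file:
        ctx.load_verify_locations(ca_file)
    return ctx
```

A caller would wrap a connected socket with `mtls_client_context(...).wrap_socket(sock, server_hostname=...)`. In a mesh, the peer's identity is typically a SPIFFE-style URI in the certificate rather than a DNS name, so a real data plane checks that identity instead of relying only on hostname verification.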

The Kuma service mesh consists of three components:

- Kuma CNI: This CNI plugin uses annotations to identify user application pods that have sidecars, and sets up traffic redirection for them. It does this during the network setup phase of the pod lifecycle, when each pod is scheduled in Kubernetes. (The sidecar container itself is added to the pod earlier, by the control plane's mutating admission webhook.)

- Kuma-sidecar: A sidecar runs next to every instance of an exposed service. Services delegate all connectivity and observability concerns to this out-of-process runtime, which sits on the execution path of every request: it proxies all outbound connections, accepts all inbound connections, and enforces traffic policies such as routing and logging at runtime. With this approach, developers don't have to worry about encrypting connections and can focus entirely on their services and applications. It is called a sidecar proxy because it is another container running alongside the service process in the same pod. Every running service instance has its own sidecar proxy instance, and because all incoming and outgoing requests and their data always pass through it, the sidecar is also called the Kuma data plane (DP): it sits on the network data path.

- Kuma Control Plane (kuma-cp): A distributed executable written in Go that can run on Kubernetes, issue data plane certificates, and coordinate data plane (DP) state through the Kubernetes API. You configure Kuma settings and policies with Kuma Custom Resource Definitions (CRDs), and the sidecars automatically pick up changes from the control plane.
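The traffic redirection performed by the CNI plugin boils down to NAT rules in the pod's network namespace. The Python sketch below only builds the commands as strings; it does not run them. The annotation key `kuma.io/sidecar-injected`, the listener ports 15001 (outbound) and 15006 (inbound), and the sidecar UID 5678 are assumptions modeled on Kuma's transparent-proxy defaults, so check them against the documentation for your Kuma version. A real rule set is considerably more involved (dedicated chains, port exclusions, DNS capture, IPv6).

```python
from typing import Dict, List

# Assumed values, loosely modeled on Kuma's transparent-proxy defaults.
INJECTED_ANNOTATION = "kuma.io/sidecar-injected"
OUTBOUND_PORT = 15001  # sidecar listener for traffic leaving the pod
INBOUND_PORT = 15006   # sidecar listener for traffic entering the pod
SIDECAR_UID = 5678     # UID the sidecar runs as


def needs_redirect(annotations: Dict[str, str]) -> bool:
    """Only pods that actually received a sidecar get their traffic redirected."""
    return annotations.get(INJECTED_ANNOTATION, "").lower() == "true"


def redirect_rules(annotations: Dict[str, str]) -> List[str]:
    """Build a simplified iptables rule set for one pod's network namespace.

    Returns the commands as strings; nothing is executed here.
    """
    if not needs_redirect(annotations):
        return []
    nat = "iptables -t nat -A"
    return [
        # Let the sidecar's own outbound connections through untouched,
        # otherwise they would be redirected back into the sidecar forever.
        f"{nat} OUTPUT -p tcp -m owner --uid-owner {SIDECAR_UID} -j RETURN",
        # Leave loopback traffic alone.
        f"{nat} OUTPUT -p tcp -d 127.0.0.1/32 -j RETURN",
        # Everything else the app sends goes to the sidecar's outbound listener.
        f"{nat} OUTPUT -p tcp -j REDIRECT --to-ports {OUTBOUND_PORT}",
        # Everything arriving at the pod goes to the sidecar's inbound listener.
        f"{nat} PREROUTING -p tcp -j REDIRECT --to-ports {INBOUND_PORT}",
    ]


if __name__ == "__main__":
    for rule in redirect_rules({INJECTED_ANNOTATION: "true"}):
        print(rule)
```

Excluding the sidecar's own UID is the key design choice here: without that first rule, the proxy's outbound connections would be redirected back into itself in a loop.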
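The sidecar's position on the data path can be illustrated with a toy Python proxy built on `asyncio`: it accepts inbound connections and relays bytes to the application in both directions. This is not how Kuma's data plane is implemented (that is Envoy, configured by kuma-cp). The function names and ports are hypothetical, and everything a real data plane adds on top (mTLS, routing and logging policies, metrics) is left out.

```python
import asyncio


async def _pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes in one direction until EOF, then half-close the other side."""
    while data := await reader.read(65536):
        writer.write(data)
        await writer.drain()
    if writer.can_write_eof():
        writer.write_eof()  # signal "no more data" but keep the reverse direction open


async def start_sidecar(listen_port: int, app_host: str, app_port: int) -> asyncio.AbstractServer:
    """Toy inbound proxy: accept connections and forward each one to the local app.

    A real data plane would also terminate mTLS, apply routing and logging
    policies, and record metrics here; this sketch only forwards bytes.
    """

    async def handle(client_r: asyncio.StreamReader, client_w: asyncio.StreamWriter) -> None:
        app_r, app_w = await asyncio.open_connection(app_host, app_port)
        try:
            # Two independent copies: client -> app and app -> client.
            await asyncio.gather(_pipe(client_r, app_w), _pipe(app_r, client_w))
        finally:
            app_w.close()
            client_w.close()

    return await asyncio.start_server(handle, "127.0.0.1", listen_port)


async def demo() -> bytes:
    """Put the toy sidecar in front of an echo 'application' and call through it."""

    async def echo_app(r: asyncio.StreamReader, w: asyncio.StreamWriter) -> None:
        w.write(await r.readexactly(4))
        await w.drain()
        w.close()

    app = await asyncio.start_server(echo_app, "127.0.0.1", 0)  # port 0: pick a free port
    app_port = app.sockets[0].getsockname()[1]
    sidecar = await start_sidecar(0, "127.0.0.1", app_port)
    sidecar_port = sidecar.sockets[0].getsockname()[1]

    # The caller talks only to the sidecar, never directly to the app.
    reader, writer = await asyncio.open_connection("127.0.0.1", sidecar_port)
    writer.write(b"ping")
    await writer.drain()
    reply = await reader.readexactly(4)
    writer.close()
    await writer.wait_closed()

    for server in (sidecar, app):
        server.close()
        await server.wait_closed()
    return reply


if __name__ == "__main__":
    print(asyncio.run(demo()))  # the echo app's reply, relayed by the sidecar
```

The half-close in `_pipe` matters: each direction finishes independently, so a response still in flight from the app is not cut off when the client stops sending.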
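The way sidecars "automatically pick up changes from the control plane" follows a push model. The Python toy below shows the idea with hypothetical classes: the control plane stores policies, bumps a version on every change, and pushes a snapshot to each subscribed data plane, which keeps only the newest version. Kuma actually delivers configuration to its Envoy-based data planes over Envoy's xDS APIs; this in-memory, synchronous version only illustrates the flow, and the policy name and fields are made up.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Policy = Dict[str, str]
Snapshot = Dict[str, Policy]
Listener = Callable[[int, Snapshot], None]


@dataclass
class ControlPlane:
    """Toy control plane: stores policies and pushes versioned snapshots.

    In-memory and synchronous; real meshes stream Envoy config over xDS instead.
    """

    version: int = 0
    policies: Snapshot = field(default_factory=dict)
    listeners: List[Listener] = field(default_factory=list, init=False, repr=False)

    def subscribe(self, listener: Listener) -> None:
        """A data plane connects and immediately receives the current state."""
        self.listeners.append(listener)
        listener(self.version, dict(self.policies))

    def apply(self, name: str, policy: Policy) -> None:
        """Store or replace a policy (think: applying a CRD), then push to everyone."""
        self.policies[name] = policy
        self.version += 1
        for listener in self.listeners:
            listener(self.version, dict(self.policies))


@dataclass
class DataPlane:
    """Toy sidecar that keeps only the newest configuration it has seen."""

    name: str
    version: int = -1
    config: Snapshot = field(default_factory=dict)

    def on_update(self, version: int, snapshot: Snapshot) -> None:
        if version > self.version:  # ignore stale or duplicate pushes
            self.version = version
            self.config = snapshot


# Usage: a policy applied once reaches every subscribed sidecar,
# including one that connects later.
cp = ControlPlane()
web, backend = DataPlane("web"), DataPlane("backend")
cp.subscribe(web.on_update)
cp.apply("mesh-mtls", {"mtls": "enabled"})  # hypothetical policy name and fields
cp.subscribe(backend.on_update)
print(web.version, backend.version, backend.config)
# prints: 1 1 {'mesh-mtls': {'mtls': 'enabled'}}
```

Versioning each snapshot is what lets a data plane safely ignore an out-of-order or repeated push instead of rolling back to older configuration.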

Conclusion

Today's service mesh topology closely resembles the Enterprise Service Bus (ESB) architecture of the 1990s and 2000s. The difference is that an ESB routes proxied traffic according to business policies, whereas a mesh leaves you free to connect applications as you like while it manages routes and policies from the top down.

In my opinion, the biggest reason ESB architecture never became more popular in the industry is that it imposes monolithic codebase requirements and, eventually, the dependency management problems that come with them. Dozens of projects end up sharing dependencies to manage objects on the ESB, which becomes a major software management headache.

Service mesh technology reduces complexity by keeping it out of the application code. It lets developers move security, reliability, and observability out of the application stack and into the infrastructure layer.

Original title: Implementing a Secure Service Mesh. Author: Jonathan Kelley